Interactive Language Understanding with Multiple Timescale Recurrent Neural Networks

Stefan Heinrich, Stefan Wermter

Conference: Proceedings of the 24th International Conference on Artificial Neural Networks (ICANN2014), pp. 193-200, Sep 2014

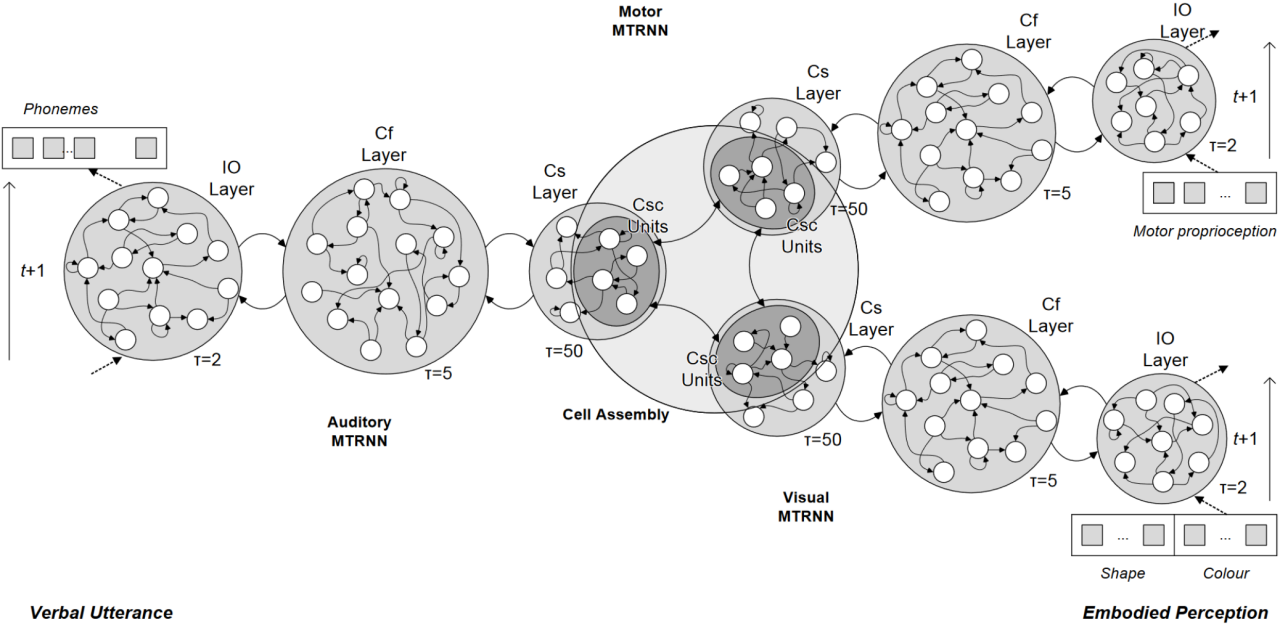

Abstract: Natural language processing in the human brain is complex and dynamic. Models for understanding how the brain’s architecture acquires language need to take into account the temporal dynamics of verbal utterances as well as of action and visual embodied perception. We propose an architecture based on three Multiple Timescale Recurrent Neural Networks (MTRNNs) interlinked in a cell assembly that learns verbal utterances grounded in dynamic proprioceptive and visual information. Results show that the architecture can describe novel dynamic actions with correct novel utterances, and they also indicate that multi-modal integration allows for the disambiguation of concepts.
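The abstract does not spell out what "multiple timescale" means in practice. As a rough illustration, the following is a minimal sketch of the commonly used leaky-integrator update behind MTRNNs: each unit i has a timescale τ_i, and units with a larger τ_i change more slowly, so layers with different τ capture dynamics at different temporal resolutions. Everything here is an assumption for illustration only. The function names (`mtrnn_step`, `make_mtrnn`, `run`), the layer sizes, the τ values, the tanh activation and the all-to-all connectivity are hypothetical and are not the configuration used in the paper. The sketch models a single untrained MTRNN, not the three interlinked networks of the proposed architecture.

```python
import math
import random


def mtrnn_step(u, y, weights, bias, tau):
    """One discrete-time leaky-integrator update, applied to every unit.

    u[i]          internal state of unit i at time t
    y[j]          activation of unit j at time t
    weights[i][j] connection weight from unit j to unit i
    bias[i]       bias of unit i
    tau[i]        timescale of unit i; a larger tau means slower dynamics

    u_i(t+1) = (1 - 1/tau_i) * u_i(t) + (1/tau_i) * (sum_j w_ij * y_j(t) + b_i)
    """
    n = len(u)
    new_u = []
    for i in range(n):
        net = sum(weights[i][j] * y[j] for j in range(n)) + bias[i]
        new_u.append((1.0 - 1.0 / tau[i]) * u[i] + net / tau[i])
    # tanh is an illustrative (assumed) choice of activation function
    new_y = [math.tanh(v) for v in new_u]
    return new_u, new_y


def make_mtrnn(layer_sizes, layer_taus, seed=0):
    """Hypothetical helper: build a fully connected network whose units are
    grouped into layers (e.g. input-output, fast context, slow context),
    with all units in a layer sharing one timescale. All-to-all random
    connectivity is a simplifying assumption; no training is done here."""
    rng = random.Random(seed)
    tau = [t for size, t in zip(layer_sizes, layer_taus) for _ in range(size)]
    n = len(tau)
    weights = [[rng.uniform(-0.5, 0.5) for _ in range(n)] for _ in range(n)]
    bias = [0.0] * n
    return weights, bias, tau


def run(weights, bias, tau, u0, steps):
    """Run the network freely from initial state u0; return each step's state."""
    u = list(u0)
    y = [math.tanh(v) for v in u]
    history = []
    for _ in range(steps):
        u, y = mtrnn_step(u, y, weights, bias, tau)
        history.append(u)
    return history


if __name__ == "__main__":
    # Illustrative sizes and timescales for IO, fast and slow context layers
    weights, bias, tau = make_mtrnn([4, 6, 2], [2.0, 5.0, 70.0])
    u0 = [0.0] * 10 + [0.3, -0.3]  # non-zero initial slow-context state
    states = run(weights, bias, tau, u0, steps=20)
    print(len(states), "steps; final slow-context state:", states[-1][10:])
```

With a constant input and no recurrent weights, a unit with τ = 2 moves halfway toward its input in one step, while a unit with τ = 50 moves only 2% of the way, which is the separation of timescales the name refers to.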