Adaptive and Variational Continuous Time Recurrent Neural Networks

Stefan Heinrich, Tayfun Alpay, Stefan Wermter

Conference: Proceedings of the Joint IEEE International Conference on Development and Learning and on Epigenetic Robotics (ICDL-EpiRob2018), pp. 13-18, Tokyo, Japan, Sep 2018

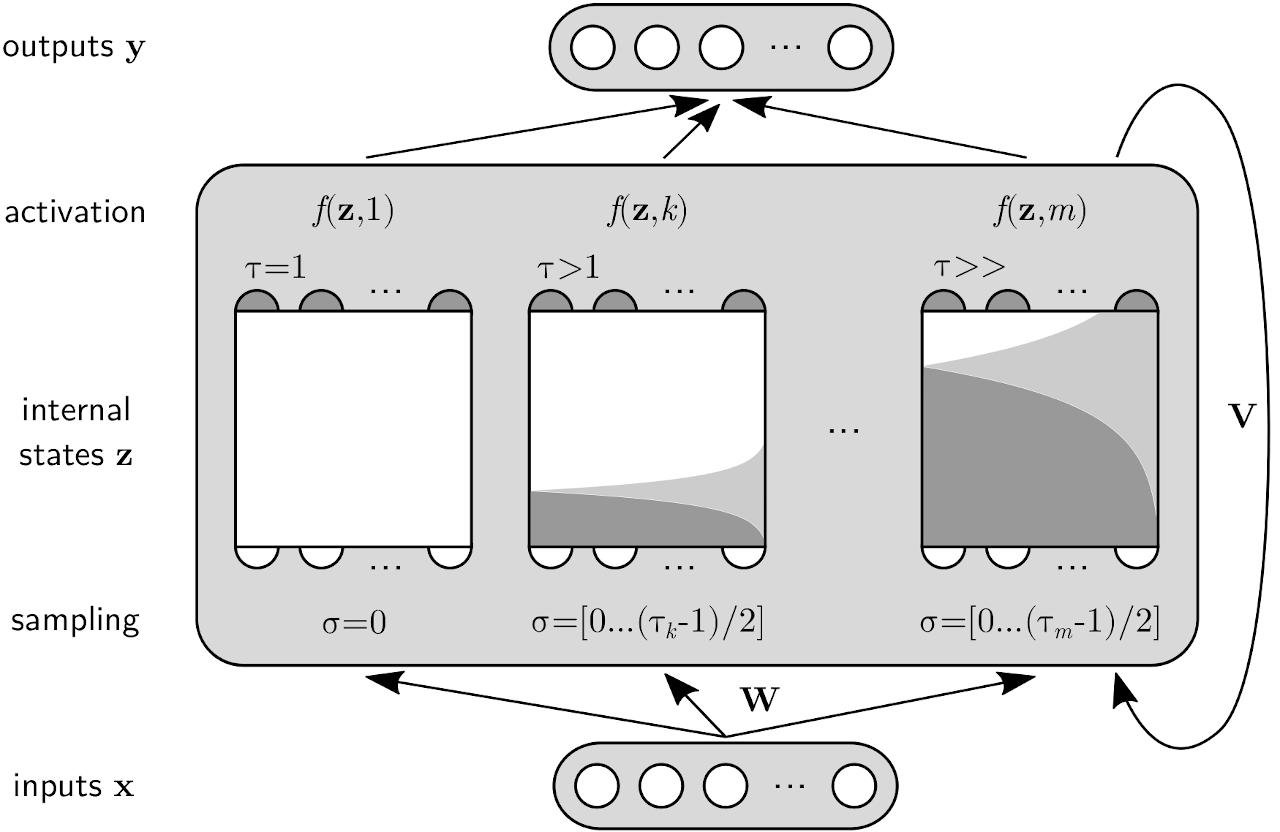

Abstract: In developmental robotics, we model cognitive processes, such as body motion or language processing, and study them in natural real-world conditions. These sequential processes inherently occur on different continuous timescales. Just as our brain copes with them through hierarchical abstraction and the coupling of different processing modes, computational recurrent neural models need to be capable of adapting to the temporally different characteristics of sensorimotor information. In this paper, we propose adaptive and variational mechanisms that can tune the timescales in Continuous Time Recurrent Neural Networks (CTRNNs) to the characteristics of the data. We study these mechanisms in both synthetic and natural sequential tasks to contribute to a deeper understanding of how the networks develop multiple timescales and represent inherent periodicities and fluctuations. Among our findings, our Adaptive CTRNN (ACTRNN) model self-organises its timescales during end-to-end learning towards both representing short-term dependencies and modulating representations based on long-term dependencies.