Cross-modal emotion recognition: How similar are patterns between DNNs and human fMRI data?

Christoph Korn, Sasa Redzepovic, Jan Gläscher, Matthias Kerzel, Pablo Barros, Stefan Heinrich, Stefan Wermter

Conference: Proceedings of the ICLR2021 Workshop on How Can Findings About The Brain Improve AI Systems? (Brain2AI@ICLR2021), Virtual, Earth, May 2021

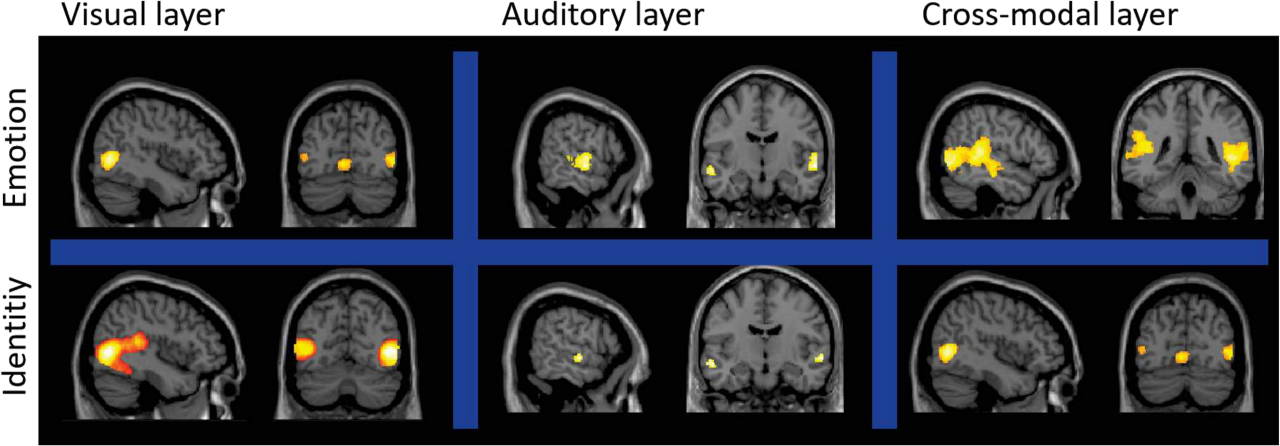

Abstract: Deep neural networks (DNNs) have reached human-like performance in many perceptual classification tasks, including emotion recognition from human faces and voices. Can the patterns in the layers of DNNs inform us about the processes in the human brain, and vice versa? Here, we address this question for cross-modal emotion recognition. We obtained functional magnetic resonance imaging (fMRI) data from 43 human participants presented with 72 audio-visual stimuli of actors and actresses depicting six different emotions. The same stimuli were classified with high accuracy by our pre-trained DNNs, which are built on a cross-channel convolutional architecture. We used supervised learning to classify four properties of the audio-visual stimuli: the depicted emotion, the identity of the actor or actress, their gender, and the spoken sentence. Inspired by recent studies using representational similarity analysis (RSA) for uni-modal stimuli, we assessed the similarities between the layers of the DNNs and the fMRI data. As hypothesized, we identified gradients in pattern similarities along the different layers of the auditory, visual, and cross-modal channels of the DNNs. These gradients varied in expected ways across the four classification regimes: overall, the DNNs relied more on the visual arm, whereas for classifying spoken sentences they relied more on the auditory arm. Crucially, searchlight analyses revealed similarities between the different layers of the DNNs and the fMRI data. These pattern similarities varied across the brain regions involved in processing auditory, visual, and cross-modal stimuli. In sum, our findings highlight that emotion recognition from cross-modal stimuli elicits similar patterns in DNNs and neural signals. As a next step, we aim to assess how these patterns differ, which may open avenues for improving DNNs by incorporating patterns derived from the processing of cross-modal stimuli in the human brain.
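The abstract does not specify which dissimilarity measure or comparison statistic the RSA used, so the following is only a generic sketch of the technique, not the authors' pipeline. It assumes correlation-distance representational dissimilarity matrices (RDMs, 1 minus Pearson r between the response patterns of each pair of stimuli) and compares a DNN layer's RDM with a brain RDM via the Spearman correlation of their off-diagonal entries. The function names (`rdm`, `rank`, `rsa_score`) are hypothetical, and the data are synthetic: 72 stimuli split evenly across six emotions, one made-up voxel pattern set, and two made-up layers.

```python
import numpy as np


def rdm(patterns: np.ndarray) -> np.ndarray:
    """Representational dissimilarity matrix: 1 - Pearson r between the
    response patterns of every pair of stimuli (rows = stimuli)."""
    return 1.0 - np.corrcoef(patterns)


def rank(x: np.ndarray) -> np.ndarray:
    """Zero-based ranks of a 1-D array; tied values get the mean of their ranks."""
    order = np.argsort(x, kind="mergesort")
    positions = np.empty(len(x), dtype=float)
    positions[order] = np.arange(len(x), dtype=float)
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    inverse = inverse.ravel()
    sums = np.bincount(inverse, weights=positions)  # summed ranks per unique value
    return (sums / counts)[inverse]


def rsa_score(patterns_a: np.ndarray, patterns_b: np.ndarray) -> float:
    """Spearman correlation between the upper triangles of two RDMs."""
    rdm_a, rdm_b = rdm(patterns_a), rdm(patterns_b)
    iu = np.triu_indices(rdm_a.shape[0], k=1)  # off-diagonal stimulus pairs only
    ra, rb = rank(rdm_a[iu]), rank(rdm_b[iu])
    return float(np.corrcoef(ra, rb)[0, 1])  # Pearson on ranks = Spearman


# Synthetic illustration (NOT the study's data): 72 stimuli, 6 emotions x 12.
rng = np.random.default_rng(0)
n_stimuli, n_emotions = 72, 6
emotion = np.repeat(np.arange(n_emotions), n_stimuli // n_emotions)
onehot = np.eye(n_emotions)[emotion]  # shape (72, 6)

# Hypothetical voxel patterns (e.g. one searchlight sphere) carrying emotion information.
brain = onehot @ rng.normal(size=(n_emotions, 100)) + rng.normal(size=(n_stimuli, 100))

# Hypothetical DNN layers: an "early" layer with no emotion information and a
# "late" layer whose activations are partly driven by the depicted emotion.
layers = {
    "early": rng.normal(size=(n_stimuli, 64)),
    "late": onehot @ rng.normal(size=(n_emotions, 64)) + rng.normal(size=(n_stimuli, 64)),
}

for name, acts in layers.items():
    print(name, round(rsa_score(acts, brain), 3))
```

The diagonal of each RDM is excluded because it is trivially zero, and a rank correlation is used because it only assumes a monotonic relation between DNN and brain dissimilarities. In a searchlight analysis, a score like this would be computed for the voxels of each local sphere and each layer, yielding a brain map per layer.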