Adaptive Learning of Linguistic Hierarchy in a Multiple Timescale Recurrent Neural Network

Stefan Heinrich, Cornelius Weber, Stefan Wermter

Conference: Proceedings of the 22nd International Conference on Artificial Neural Networks (ICANN2012), pp. 555-562, Sep 2012

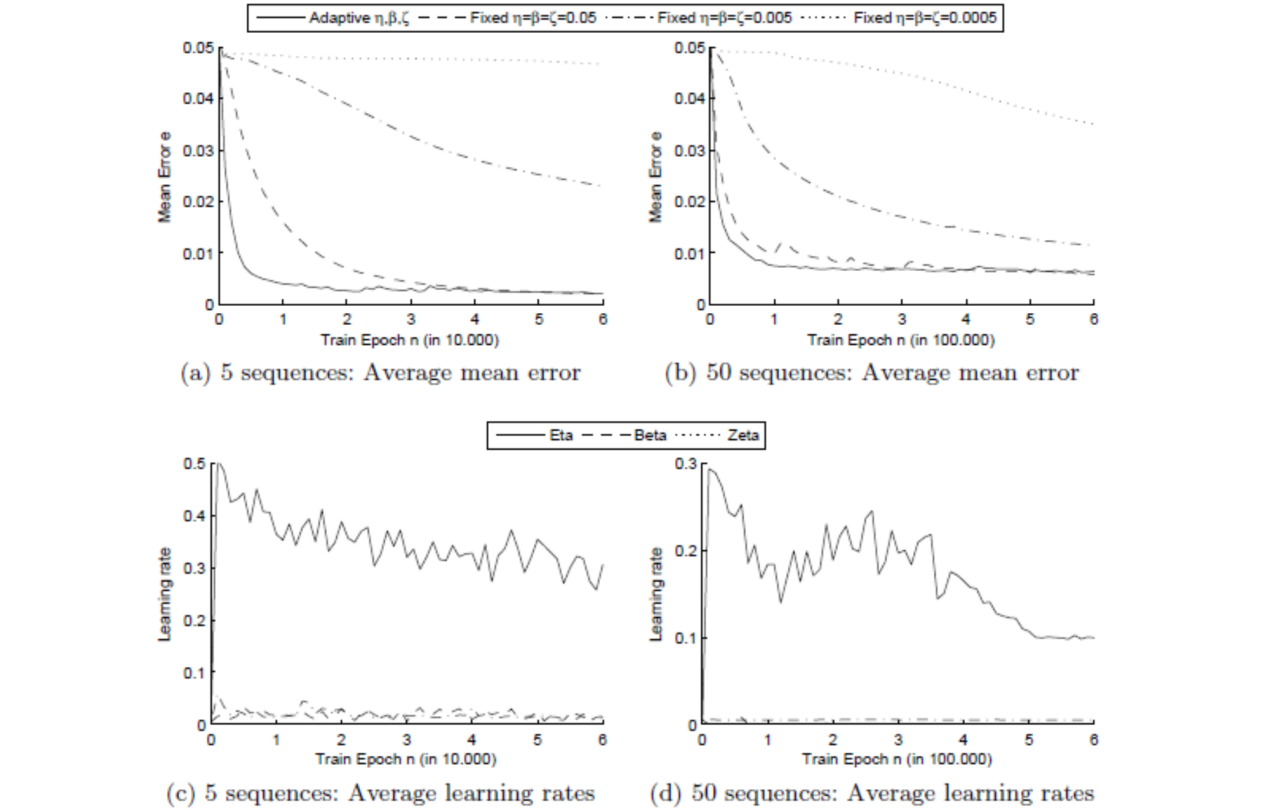

Abstract: Recent research has revealed that hierarchical linguistic structures can emerge in a recurrent neural network with a sufficient number of delayed context layers. As a representative of this type of network, the Multiple Timescale Recurrent Neural Network (MTRNN) has been proposed for recognising and generating known as well as unknown linguistic utterances. However, training on utterances as performed in other approaches demands a high training effort. In this paper, we propose a robust mechanism for adaptive learning rates and internal states to speed up the training process substantially. In addition, we compare the generalisation of the network trained with the adaptive mechanism against that of the network trained with standard fixed learning rates, finding at least equal capabilities.