Understanding Auditory Representations of Emotional Expressions with Neural Networks

Iris Wieser, Pablo Barros, Stefan Heinrich, Stefan Wermter

Journal: Neural Computing and Applications, Springer, Dec 2018

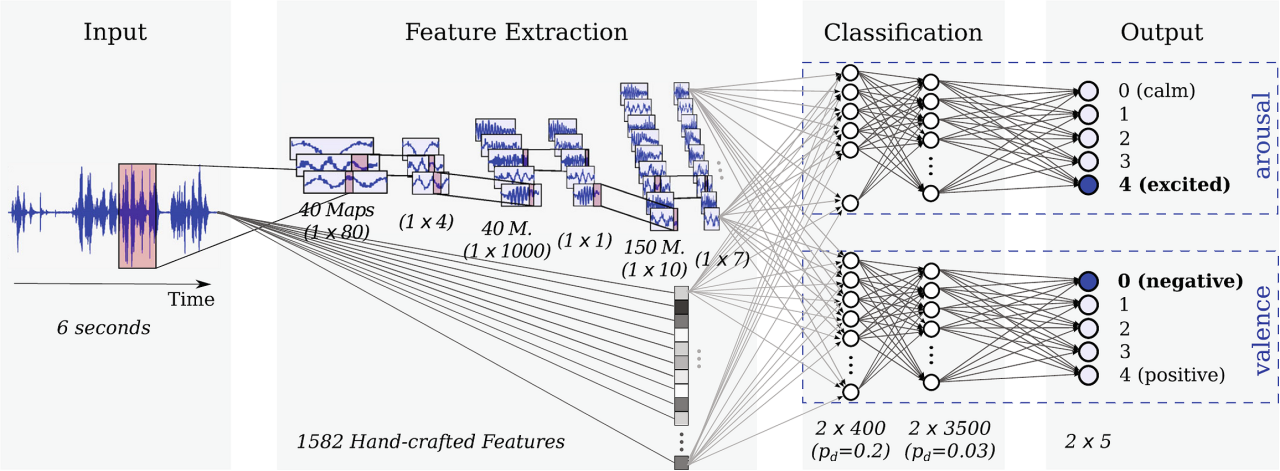

Abstract: In contrast to many established emotion recognition systems, convolutional neural networks do not rely on handcrafted features to categorize emotions. Although achieving state-of-the-art performances, it is still not fully understood what these networks learn and how the learned representations correlate with the emotional characteristics of speech. The aim of this work is to contribute to a deeper understanding of the acoustic and prosodic features that are relevant for the perception of emotional states. Firstly, an artificial deep neural network architecture is proposed that learns the auditory features directly from the raw and unprocessed speech signal. Secondly, we introduce two novel methods for the analysis of the implicitly learned representations based on data-driven and network-driven visualization techniques. Using these methods, we identify how the network categorizes an audio signal as a two-dimensional representation of emotions, namely valence and arousal. The proposed approach is a general method to enable a deeper analysis and understanding of the most relevant representations to perceive emotional expressions in speech.