Integrating Image-based and Knowledge-based Representation Learning

Ruobing Xie, Stefan Heinrich, Zhiyuan Liu, Cornelius Weber, Yuan Yao, Stefan Wermter, Maosong Sun

Journal: IEEE Transactions on Cognitive and Developmental Systems, vol. 12, no. 2, IEEE, Apr 2019

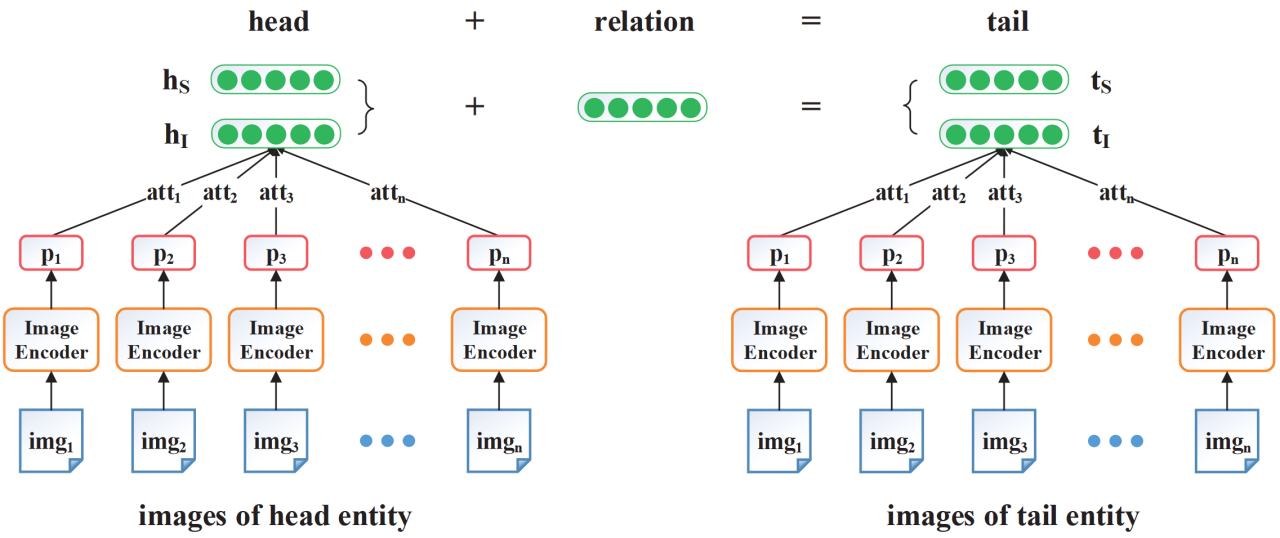

Abstract: A variety of brain areas are involved in language understanding and generation, which accounts for the scope of language that can refer to many real-world matters. In this work, we investigate how regularities among real-world entities affect emergent language representations. Specifically, we consider knowledge bases, which represent entities and their relations as structured triples, and image representations, which are obtained via deep convolutional networks. We combine these sources of information to learn entity representations in an Image-based Knowledge Representation Learning (IKRL) model. An attention mechanism lets more informative images contribute more to the image-based representations. Evaluation results show that the model outperforms all baselines on the tasks of knowledge graph completion and triple classification. In analysing the learned models, we found that the structure-based and image-based representations integrate different aspects of the entities and that the attention mechanism provides robustness during learning.
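
The abstract does not give the scoring functions, so the following is only a minimal sketch of how attention-weighted image aggregation and triple scoring could fit together. It assumes a softmax over dot products between each image embedding and the entity's structure-based embedding, and a translation-style energy in which a lower value means a more plausible triple. The function names (`attention_weights`, `aggregate_images`, `translation_energy`) and the toy vectors are hypothetical and are not taken from the paper.

```python
import math


def attention_weights(image_vecs, structure_vec):
    """Softmax over dot products of each image embedding with the structure embedding.

    Assumption: dot-product attention; the abstract only says that more
    informative images should contribute more.
    """
    scores = [sum(p * s for p, s in zip(vec, structure_vec)) for vec in image_vecs]
    max_score = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(score - max_score) for score in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate_images(image_vecs, structure_vec):
    """Attention-weighted sum of the image embeddings of one entity."""
    weights = attention_weights(image_vecs, structure_vec)
    dim = len(structure_vec)
    return [sum(w * vec[d] for w, vec in zip(weights, image_vecs)) for d in range(dim)]


def translation_energy(head, relation, tail):
    """L1 distance |head + relation - tail| (assumed translation-style energy)."""
    return sum(abs(h + r - t) for h, r, t in zip(head, relation, tail))


# Toy example: two image embeddings for one entity (hypothetical values).
image_vecs = [[1.0, 0.0], [0.0, 1.0]]
structure_vec = [2.0, 0.0]

weights = attention_weights(image_vecs, structure_vec)
image_based = aggregate_images(image_vecs, structure_vec)
energy = translation_energy([1.0, 0.0], [0.0, 1.0], [1.0, 1.0])
```

In this toy case the first image is better aligned with the structure-based embedding, so it receives the larger weight and dominates the aggregated image-based representation. The same energy function could be applied to any pairing of structure-based and image-based head and tail embeddings, although how those pairings are combined is not specified in the abstract.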